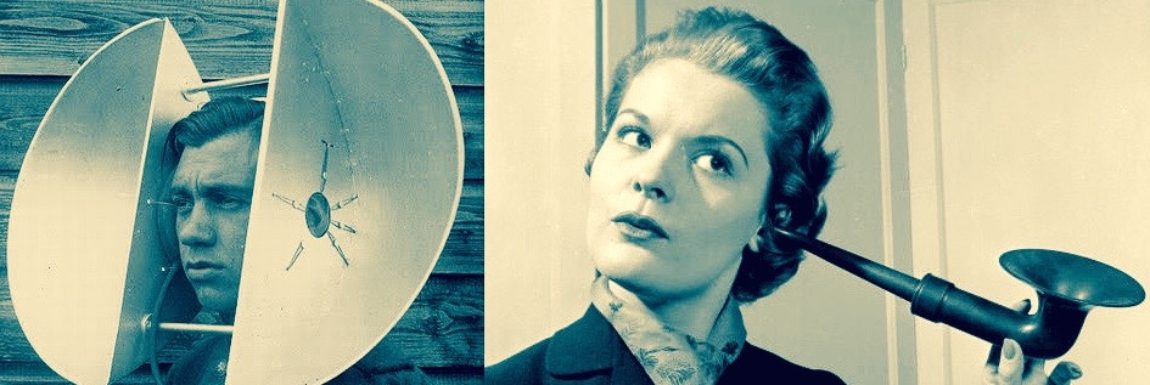

Achin Bhowmik calls it “soup”, his apt analogy for a noisy environment such as a restaurant or cocktail party.

Starkey’s Chief Technology Officer and Executive VP of Engineering has announced a breakthrough in AI research that should lead to hearing aids that can sift through that soup and pick out what we want to hear.

To understand his analogy, imagine you’re in a restaurant and all around you there is the clatter of dishes, the murmur of conversations across the room and piped-in background music. It all becomes one big bowl of acoustic soup with too many ingredients.

All you really want to hear is the person across the table from you, but their voice is lost in that soup. Starkey’s research has demonstrated that AI can pick out the nuggets that make up your companion’s voice, eliminate the rest, and deliver that voice clearly to your ears without all of the hubbub.

That’s the promise of Bhowmik and his team’s research. I sat down with him at Starkey’s Innovation Expo to learn more. We began by reviewing what hearing aids can do now and then looked ahead to the future.

The biggest frustration for most of us with hearing loss is understanding speech, particularly in noisy environments such as restaurants. How are you addressing that now?

I do realize that speech discrimination is what patients struggle with the most, particularly people with severe to profound hearing loss. Grandparents want to understand what their grandkids are saying, and just amplifying sound is not helping them. So a lot of the technology is aimed at helping speech discrimination through directionality and noise reduction.

That’s the classic technology that people are using today.

Our new Livio Edge AI hearing aids go a step further. They can instantly capture an acoustic “snapshot” of the environment and feed it to the AI system, which responds with the optimal settings for that particular acoustic environment.

So when you’re in a noisy restaurant, for example, restaurant mode kicks in. It will increase amplification without amplifying background noise, and it will use directionality, knowing that you probably want to listen to the person in front of you.

So these are all techniques that we combine to do as much as we can.

You have announced that Starkey is getting ready to go beyond those techniques by using artificial intelligence. In fact, you call it a real breakthrough.

What we have done is build an AI deep neural network that dips into what I call the “acoustic sound soup,” where no one knows exactly what’s in there. Our deep neural network is able to discriminate and pick out the parts of that “soup” that are speech. That’s what the human brain does, right?

When we are in a noisy restaurant, our brain is trying to decipher which part of the soup is speech. But unfortunately, if we have severe to profound hearing loss, the incoming signal doesn’t give the brain enough information to do that job anymore.

The way we are trying to solve that problem is, I believe, at the cutting edge of AI. We are training the AI with a lot of annotated speech in a complex acoustic sound soup so that it can do what the human brain was designed to do, which is to discriminate speech from the other acoustics. And what we’re finding is, yes, we can.

It’s really, really interesting, because then we can take those speech components, separate them from the rest of the acoustic environment, process and amplify them, and deliver only the speech to the ear.

But to be completely open about the current state of the technology: since the acoustics have to be sent to this deep neural network to be processed and improved before going to the hearing aid speaker, there is going to be a delay.

That delay will be tens of milliseconds, maybe even closer to a hundred milliseconds, which would probably be unacceptable to people with no hearing loss or mild hearing loss.

But people with profound hearing loss are saying this is a godsend, this is great!

Thanks Achin, that’s very encouraging news.
